SambaNova Launches The World's Fastest AI Platform

In an exciting development for AI and machine learning, SambaNova Systems has announced the launch of SambaNova Cloud, the world’s fastest AI inference platform. Leveraging the power of its SN40L AI chip, SambaNova Cloud delivers unmatched speed and precision, running the groundbreaking Llama 3.1 405B model at an impressive 132 tokens per second (t/s). The platform is available to developers today, offering a powerful way to build generative AI applications on the largest and most capable open-source models.

The Power of Llama 3.1 in SambaNova Cloud

Meta’s Llama 3.1 models have gained significant attention this year, with versions ranging from 8B to 405B parameters. The 405B model, in particular, is a breakthrough for open-source AI, offering a serious alternative to the proprietary models from industry giants like OpenAI, Anthropic, and Google. However, deploying a model of this scale has been a challenge because of its size, complexity, and the associated speed trade-offs.

This is where SambaNova comes in. According to CEO Rodrigo Liang, SambaNova is the only platform running Llama 3.1 405B at full precision and at 132 t/s, a feat that competitors using Nvidia GPUs cannot match. By using the custom-built SN40L AI chip, SambaNova reduces the cost and complexity of deploying massive models like Llama 3.1 405B while delivering faster speeds than ever before.

For enterprises, this means unprecedented flexibility. “Customers want versatility,” says Liang. “They need the 70B model at lightning-fast speeds for agentic AI workflows, and the 405B model for the highest fidelity and best results. Only SambaNova Cloud offers both today.”

Unmatched Speed for AI Developers

Developers can now access SambaNova Cloud through a free API, allowing them to build and deploy applications with world-record speeds. In addition to running the 405B model at 132 t/s, SambaNova Cloud also supports the smaller Llama 3.1 70B model at 461 tokens per second, making it ideal for agentic AI systems that require high-speed, real-time responses. These speeds are essential for applications needing fast token generation, such as conversational agents, real-time analytics, and autonomous systems.
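To put these throughput figures in perspective, here is a back-of-the-envelope sketch in Python. It assumes a steady decode rate and ignores time to first token, network latency, and rate limits. The `generation_seconds` helper and the 500-token response length are hypothetical, chosen only for illustration.

```python
# Throughput figures quoted in the article (tokens per second).
THROUGHPUT_TPS = {
    "Llama 3.1 405B": 132,
    "Llama 3.1 70B": 461,
}


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate the time to generate num_tokens at a steady decode rate.

    Simplifying assumption: ignores time to first token and network latency.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    response_tokens = 500  # hypothetical response length
    for model, tps in THROUGHPUT_TPS.items():
        seconds = generation_seconds(response_tokens, tps)
        print(f"{model}: ~{seconds:.1f} s for {response_tokens} tokens")
```

Under these assumptions, a 500-token reply would take roughly 3.8 seconds on the 405B model and about 1.1 seconds on the 70B model. The gap compounds in agentic workflows that chain many model calls, which is one reason the smaller model is pitched for those use cases.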

Andrew Ng, a renowned AI expert and founder of DeepLearning.AI, praised the technical achievements of SambaNova Cloud, stating that running the 405B model at 16-bit precision and over 100 t/s is a game-changer for developers working with large language models (LLMs).

Independent Validation of SambaNova's Speed

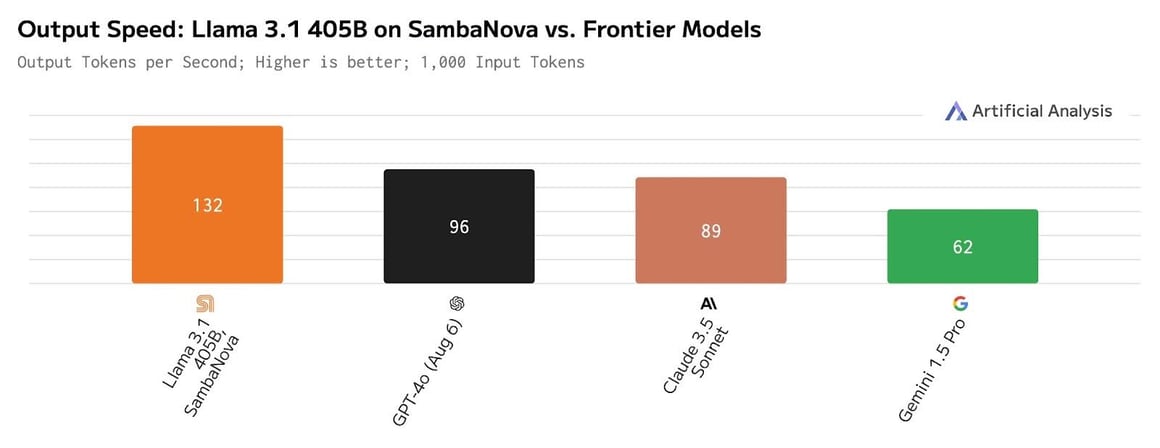

The platform’s impressive performance has been independently verified. According to George Cameron, Co-Founder of Artificial Analysis, SambaNova Cloud’s Llama 3.1 405B endpoint achieved a record output speed of 132 tokens per second, faster than the frontier model endpoints from OpenAI, Anthropic, and Google. This speed makes it the best option for AI use cases where rapid token generation is critical.
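For readers who want to sanity-check a tokens-per-second figure themselves, the sketch below shows one common way to compute output speed from a streamed response. It is not Artificial Analysis's methodology, which the article does not describe. The `measure_tokens_per_second` function is hypothetical, and it assumes you already have an iterable that yields tokens as they arrive from whatever client you use.

```python
import time
from typing import Callable, Iterable


def measure_tokens_per_second(
    token_stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Return output tokens per second, timed from the first token received.

    Timing starts at the first token, so time to first token is excluded.
    """
    first_time = None
    last_time = None
    count = 0
    for _ in token_stream:
        now = clock()
        if first_time is None:
            first_time = now
        last_time = now
        count += 1
    if count < 2 or last_time == first_time:
        raise ValueError("need at least two tokens with distinct timestamps")
    # count - 1 intervals elapsed between the first and the last token.
    return (count - 1) / (last_time - first_time)
```

The `clock` parameter is injectable so the function can be tested deterministically. In real use, the default `time.perf_counter` is enough, and results will vary with prompt length, network conditions, and server load.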

A Platform for Agentic AI and More

SambaNova Cloud isn’t just about speed—it’s designed to support a wide range of AI applications. The 70B model, in particular, is ideal for agentic AI workflows, where systems need to interact and collaborate to complete complex tasks. Companies like Bigtincan and Blackbox AI have already adopted SambaNova Cloud to power their AI-driven solutions, citing up to a 300% improvement in efficiency for their platforms.

How to Get Started with SambaNova Cloud

SambaNova Cloud offers multiple tiers for different needs:

- Free Tier: Open to all developers today, providing free API access to Llama 3.1 models.

- Developer Tier: Launching by the end of 2024, this tier will offer higher rate limits and access to the 8B, 70B, and 405B models for advanced development.

- Enterprise Tier: Available now, this tier is designed for large-scale production workloads with the highest rate limits and scalability.

SambaNova’s SN40L Chip: The Heart of the Platform

At the core of SambaNova Cloud’s performance is the SN40L AI chip. Its patented dataflow architecture and three-tier memory design allow it to run AI models faster and more efficiently than traditional GPU-based solutions. This unique hardware accelerates inference speeds, making SambaNova Cloud the fastest platform for AI developers today.

SambaNova: Pioneering AI for the Enterprise

Founded in 2017 and headquartered in Palo Alto, SambaNova Systems was created by industry veterans from Sun/Oracle and Stanford University. The company is backed by top-tier investors, including SoftBank, BlackRock, and Intel Capital, and is committed to bringing cutting-edge AI technology to the enterprise. SambaNova’s cloud platform and custom AI chips enable organizations to quickly deploy state-of-the-art generative AI capabilities, transforming how businesses use AI.

For developers and enterprises looking to leverage the power of open-source models like Llama 3.1 with unmatched speed and precision, SambaNova Cloud is available now. Visit SambaNova’s website or follow them on LinkedIn and X to learn more.

For the full report, see SambaNova’s blog post.
