Introducing ARIA: The First Open Multimodal Native MoE Model

Rhymes.ai has launched ARIA, which it describes as the first open multimodal native mixture-of-experts (MoE) model. ARIA is designed to handle several data types within a single model. Because its MoE architecture activates only part of the network for each input, it aims to set a new standard for multimodal AI by processing diverse data more efficiently than a dense model of comparable size.

The field of artificial intelligence is evolving rapidly, with new models emerging to tackle complex tasks that involve multiple data modalities. Rhymes.ai has taken a significant step forward with ARIA, a model engineered to process and integrate different types of data, such as text, code, images, and video, within a single architecture. This makes more sophisticated AI applications possible.

Multimodal models are essential for understanding and generating content that reflects the complexity of real-world information. By combining different forms of data, these models can provide richer insights and more accurate outputs. ARIA uses MoE to allocate compute selectively: each input is handled by only a few specialized experts rather than the whole network, which lets the model cover diverse tasks efficiently while maintaining high performance.

ARIA has several features that improve its functionality and usability. It uses a mixture-of-experts architecture in which a learned router sends each input token to a small subset of specialized expert networks, so only a fraction of the model's parameters is active for any given token. This design reduces the compute spent per token and lets different experts specialize in different kinds of data. ARIA also supports integration with existing frameworks, making it easier for developers to incorporate its capabilities into their applications. Finally, the model is open source, which encourages collaboration and innovation within the AI community and makes its development more transparent.
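
The routing step described above can be illustrated with a generic top-k gated MoE layer. The sketch below is a minimal, framework-free illustration of that common technique, not ARIA's actual implementation. The number of experts, `k=2`, and the toy `experts` and `toy_gate` are hypothetical stand-ins; in a real MoE model the experts are feed-forward networks and the gate is a learned projection of each token's hidden state.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits: Vector, k: int) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in ranked])
    return list(zip(ranked, weights))


def moe_forward(token: Vector,
                gate: Callable[[Vector], Vector],
                experts: List[Callable[[Vector], Vector]],
                k: int = 2) -> Vector:
    """Weighted sum of the outputs of only the k experts chosen for this token."""
    out = [0.0] * len(token)
    for idx, weight in top_k_route(gate(token), k):
        expert_out = experts[idx](token)  # unselected experts are never evaluated
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out


# Hypothetical toy setup: four "experts" that simply scale their input,
# and a fixed gate that ranks expert 1 first and expert 0 second.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]


def toy_gate(token: Vector) -> Vector:
    return [0.0, math.log(3.0), -5.0, -1.0]


print(moe_forward([1.0, 2.0], toy_gate, experts, k=2))  # approximately [1.75, 3.5]
```

Only the k selected experts run for each token, which is where the compute savings come from. The gate weights are renormalized over just the selected experts, so the combined output remains a weighted average of their results.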

ARIA's versatility opens up numerous possibilities across different domains. In healthcare, it could analyze patient records alongside medical images to support diagnosis and treatment planning. In creative industries, ARIA can help produce multimedia content, for example by writing descriptions, captions, or scripts grounded in images and video, which strengthens storytelling. Its ability to understand video also makes it a useful tool for developers building applications that need to summarize, search, or answer questions about video content.

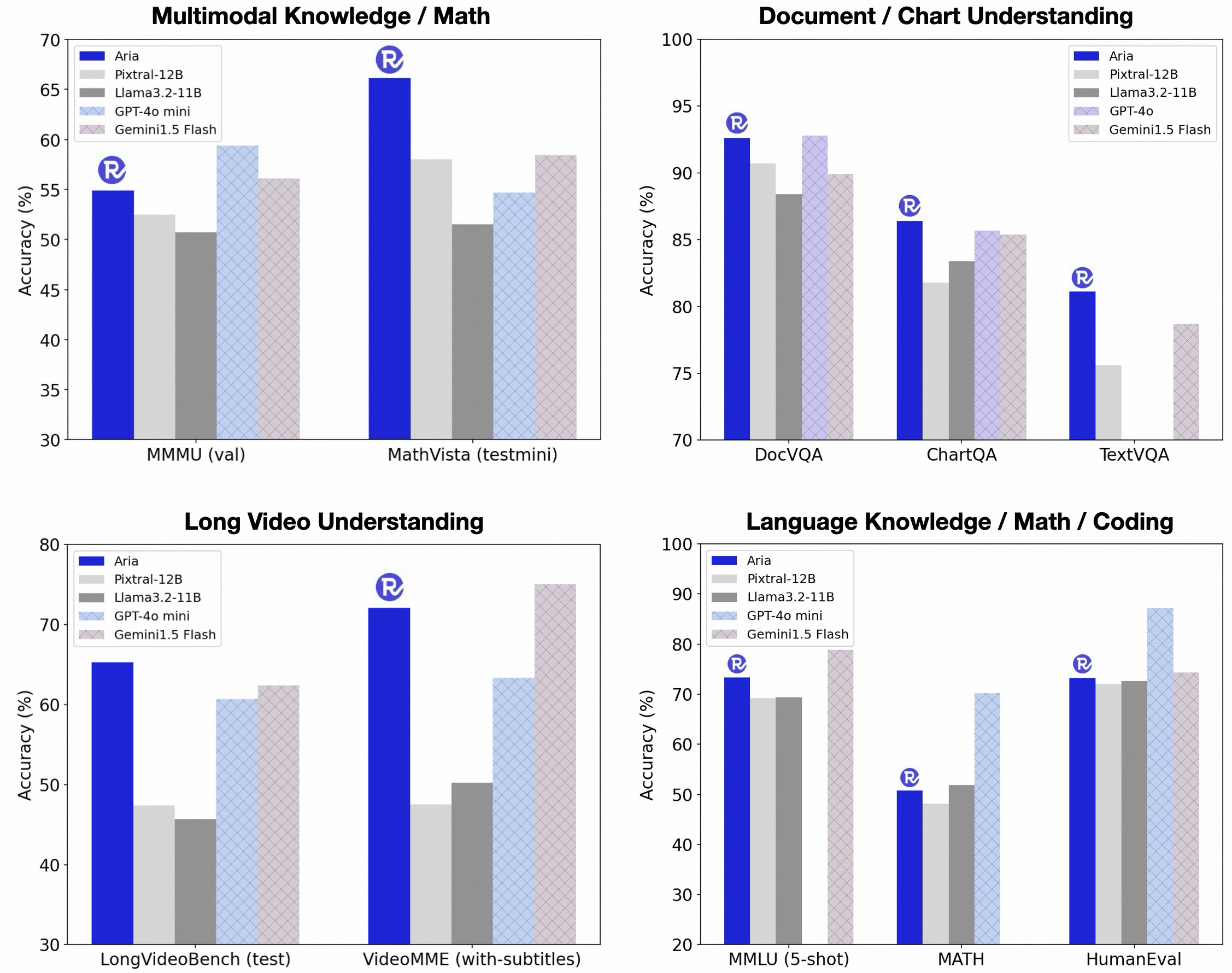

In benchmark results published by Rhymes.ai, ARIA outperforms many existing models on multimodal tasks. Because only a fraction of its parameters is active for each token, it can reach this accuracy at a lower per-token compute cost, and therefore lower latency, than a dense model with the same total parameter count. This is particularly beneficial in real-time applications where quick responses are crucial.
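
The latency argument comes down to simple arithmetic: per-token compute scales with the parameters that are active for that token, not with the total number stored. The sketch below uses a common rule of thumb (roughly 2 FLOPs per active parameter per token for a forward pass, ignoring attention over the context) and entirely hypothetical sizes. `MoEConfig` and its numbers are illustrative assumptions, not ARIA's published configuration.

```python
from dataclasses import dataclass


@dataclass
class MoEConfig:
    """Hypothetical MoE sizes in millions of parameters (not ARIA's real numbers)."""
    shared_params_m: int   # attention, embeddings, etc., used by every token
    expert_params_m: int   # parameters of one expert slot, summed over all MoE layers
    num_experts: int       # experts available per MoE layer
    top_k: int             # experts activated per token


def total_params_m(cfg: MoEConfig) -> int:
    """All parameters that must be held in memory."""
    return cfg.shared_params_m + cfg.num_experts * cfg.expert_params_m


def active_params_m(cfg: MoEConfig) -> int:
    """Parameters actually used to process a single token."""
    return cfg.shared_params_m + cfg.top_k * cfg.expert_params_m


def forward_flops_per_token(params_m: int) -> int:
    """Rule of thumb: about 2 FLOPs (one multiply, one add) per parameter per token."""
    return 2 * params_m * 1_000_000


cfg = MoEConfig(shared_params_m=1_000, expert_params_m=300, num_experts=64, top_k=6)
total = total_params_m(cfg)    # 1,000 + 64 * 300 = 20,200M
active = active_params_m(cfg)  # 1,000 +  6 * 300 =  2,800M
print(f"total={total}M active={active}M ({active / total:.1%} of weights per token)")
print(f"dense model of same total size: {forward_flops_per_token(total):.2e} FLOPs/token")
print(f"MoE with top-{cfg.top_k} routing:  {forward_flops_per_token(active):.2e} FLOPs/token")
```

Memory requirements still follow the total count, since every expert must be loaded even though only a few run per token; the savings are in compute per token.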

As AI continues to evolve, the demand for models that can seamlessly integrate multiple data types will only increase. ARIA represents a significant advancement in this area, providing a robust framework for future developments in multimodal AI. Rhymes.ai is committed to refining ARIA further based on user feedback and ongoing research, ensuring that it remains at the forefront of technological innovation.

The launch of ARIA marks a pivotal moment in the development of multimodal AI models. By combining advanced features with an open-source approach, Rhymes.ai is paving the way for more efficient and effective AI solutions across various industries. As developers and researchers explore the capabilities of ARIA, the potential for groundbreaking applications in fields such as healthcare, creative arts, and beyond becomes increasingly apparent.

For more information about ARIA and its features, visit Rhymes.ai's official blog post, where you can see real-world examples, including prompts and results.
