Enhancing AI Performance with Contextual Retrieval

This article explores the innovative approach of Contextual Retrieval, a method that enhances the performance of AI models by improving information retrieval accuracy. By combining contextual embeddings and BM25 techniques, this method significantly reduces retrieval failures, leading to better AI responses in various applications.

Introduction

In the realm of artificial intelligence, particularly in applications like customer support and legal analysis, having access to relevant background knowledge is crucial. Traditional methods of enhancing AI knowledge often involve Retrieval-Augmented Generation (RAG), which retrieves pertinent information from a knowledge base. However, conventional RAG solutions frequently fail to preserve context, resulting in suboptimal retrieval outcomes.

The Need for Contextual Retrieval

To address the limitations of traditional RAG, Anthropic introduced a method called "Contextual Retrieval". The technique uses two sub-methods: Contextual Embeddings and Contextual BM25 (BM25 stands for Best Matching 25, a lexical ranking function). Used together, they reduce retrieval failures by 49%, and by 67% when reranking is added on top.

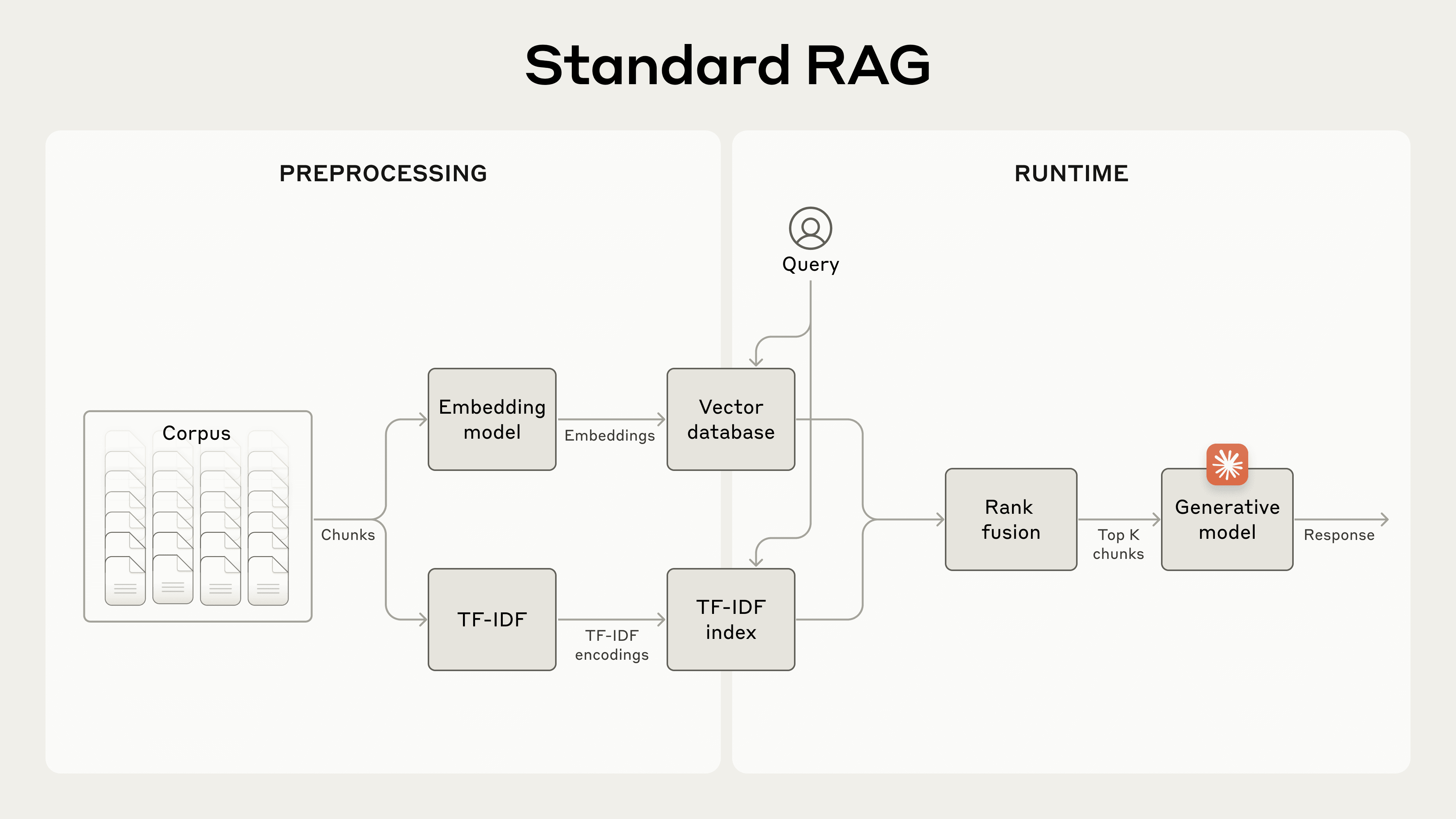

Understanding RAG

RAG typically involves several steps to preprocess a knowledge base:

- Breaking down the knowledge base into smaller text chunks.

- Using an embedding model to convert these chunks into vector embeddings.

- Storing these embeddings in a vector database for semantic searching.
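The three steps above can be sketched in a few lines. This is only a toy illustration: `toy_embed` is a bag-of-words stand-in for a real embedding model (it captures no semantics), and `ToyVectorStore` is a hypothetical in-memory substitute for a vector database.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Step 1: split the knowledge base into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def toy_embed(text: str) -> Counter:
    """Step 2 (toy stand-in): a word-count vector instead of a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Step 3 (toy stand-in): keeps (chunk, vector) pairs in memory."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, toy_embed(chunk)))

    def search(self, query: str, k: int = 3) -> list[str]:
        """Return the k chunks most similar to the query."""
        q = toy_embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]
```

In a real pipeline, `toy_embed` would be replaced by a call to an embedding model and the store by a proper vector database; the overall shape (chunk, embed, store, search) stays the same.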

While embedding models excel at capturing semantic relationships, they may overlook exact matches. This is where BM25 comes into play, providing precise lexical matching capabilities that can identify specific terms or phrases effectively.
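To make the lexical side concrete, here is a minimal sketch of BM25 scoring in plain Python. It uses one common variant of the formula; the tokenizer and the parameter defaults `k1=1.5` and `b=0.75` are assumptions for illustration, not values taken from the article.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every document against the query; higher means a better lexical match."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n if n else 0.0
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            denom = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores
```

A query containing a specific identifier, such as an error code, scores above zero only for chunks that contain those exact tokens, which is the kind of match an embedding model can miss.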

The Contextual Retrieval Method

Contextual Retrieval enhances traditional methods by adding chunk-specific context to each piece of information before embedding it. For instance, a financial report chunk might be transformed from:

original_chunk = "The company's revenue grew by 3% over the previous quarter."

to:

contextualized_chunk = "This chunk is from an SEC filing on ACME Corp's performance in Q2 2023; the previous quarter's revenue was $314 million. The company's revenue grew by 3% over the previous quarter."

Implementing Contextual Retrieval

The implementation of Contextual Retrieval can be automated using AI models like Claude (Anthropic's own solution is available in its cookbook). By instructing Claude to generate a concise context for each chunk, developers can efficiently prepare their knowledge bases for improved retrieval accuracy.
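A rough sketch of that automation is shown below. The prompt wording is modeled on the one in Anthropic's post but should be treated as an approximation, and `generate` is a hypothetical callable standing in for whatever function sends a prompt to the model and returns its text reply.

```python
from typing import Callable

# Approximate prompt template; the exact wording is an assumption.
CONTEXT_PROMPT = """<document>
{document}
</document>
Here is the chunk we want to situate within the whole document:
<chunk>
{chunk}
</chunk>
Please give a short, succinct context to situate this chunk within the overall document for the purposes of improving search retrieval of the chunk. Answer only with the succinct context and nothing else."""


def contextualize_chunk(document: str, chunk: str, generate: Callable[[str], str]) -> str:
    """Ask the model for a short context and prepend it to the chunk."""
    prompt = CONTEXT_PROMPT.format(document=document, chunk=chunk)
    context = generate(prompt).strip()
    # Fall back to the bare chunk if the model returns nothing.
    return f"{context} {chunk}" if context else chunk
```

The contextualized string, rather than the original chunk, is then what gets embedded and added to the BM25 index.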

Cost-Effectiveness with Prompt Caching

One of the significant advantages of using Claude is the ability to cache prompts, which reduces both latency and costs associated with generating contextualized chunks. This feature allows developers to reference previously cached content rather than reloading entire documents for every chunk.
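As an illustration, the request for each chunk can be arranged so that the full document sits in a cacheable prefix and only the chunk changes between calls. The sketch below just builds the request arguments as a dictionary; the `cache_control` field reflects my understanding of Anthropic's Messages API and should be checked against the current documentation, and `build_cached_request` and the model name are hypothetical.

```python
def build_cached_request(document: str, chunk: str, model: str, max_tokens: int = 200) -> dict:
    """Build keyword arguments for one context-generation call (sketch only)."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        # The document goes in the system prompt and is marked cacheable,
        # so repeated calls for the same document can reuse it.
        "system": [{
            "type": "text",
            "text": f"<document>\n{document}\n</document>",
            "cache_control": {"type": "ephemeral"},
        }],
        # Only this part differs from chunk to chunk.
        "messages": [{
            "role": "user",
            "content": (
                f"<chunk>\n{chunk}\n</chunk>\n"
                "Give a short context that situates this chunk within the document."
            ),
        }],
    }
```

Because the system block is identical for every chunk of the same document, only the first call pays the full cost of processing the document.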

Performance Improvements

Experimental results indicate that Contextual Embeddings alone can reduce retrieval failure rates significantly. When combined with BM25, the failure rate decreases even further. Notably:

- Contextual Embeddings reduced top-20-chunk retrieval failure by 35%.

- Combining Contextual Embeddings with Contextual BM25 reduced the failure rate by 49%.
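Combining the two techniques means merging two ranked lists of chunks, one from embedding search and one from BM25. The article does not say which fusion method is used, so the sketch below uses reciprocal rank fusion, a common choice, with the conventional constant `k=60` as an assumption.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of chunk IDs into one deduplicated ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            # Chunks ranked highly in any list receive a larger contribution.
            scores[chunk_id] += 1.0 / (k + rank)
    # Sort by fused score, breaking ties by ID so the result is deterministic.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))
```

A chunk that appears near the top of both lists ends up ahead of one that appears in only a single list, which is the behavior a hybrid retriever is after.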

Reranking for Enhanced Accuracy

A final layer of optimization can be achieved through reranking. After an initial retrieval pass, a reranking model scores each candidate chunk against the user's query, and only the highest-scoring chunks are passed to the AI model. This improves the relevance of what the model sees while reducing cost and latency, since fewer chunks have to be processed.
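The reranking step can be sketched as a small function that takes the candidates from initial retrieval and keeps only the best few. Here `score` is a hypothetical callable standing in for a real reranking model, and `overlap_score` is a deliberately crude toy scorer used only to make the example runnable.

```python
from typing import Callable


def rerank(query: str, candidates: list[str],
           score: Callable[[str, str], float], top_k: int = 20) -> list[str]:
    """Score each candidate chunk against the query and keep the top_k."""
    ranked = sorted(candidates, key=lambda chunk: score(query, chunk), reverse=True)
    return ranked[:top_k]


def overlap_score(query: str, chunk: str) -> float:
    """Toy scorer: the fraction of query words that also appear in the chunk."""
    q = set(query.lower().split())
    c = set(chunk.lower().split())
    return len(q & c) / len(q) if q else 0.0
```

In practice the initial retrieval would return many more candidates than are finally kept, and `overlap_score` would be replaced by a dedicated reranking model.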

The advancements brought about by Contextual Retrieval represent a significant step forward in AI information retrieval. By integrating contextual embeddings with BM25 and adding a reranking step, developers can improve their AI systems' retrieval accuracy across a range of applications. These methods are worth considering for anyone working with extensive knowledge bases. For the full article, including implementation details, see Anthropic's blog post.
