Advancing AI Reasoning: A Look at OpenAI’s o1 Model

Artificial intelligence advances almost daily, in model architecture as well as in problem-solving and reasoning, but OpenAI’s latest model, o1, marks a significant leap forward. Designed to excel at complex reasoning tasks, o1 has achieved remarkable results in competitive programming, academic benchmarks, and real-world applications. This article explores o1’s capabilities, from its performance on math and science challenges to its proficiency in coding and its human-like, step-by-step reasoning.

The Power of o1: Breaking Benchmarks and Rivaling Experts

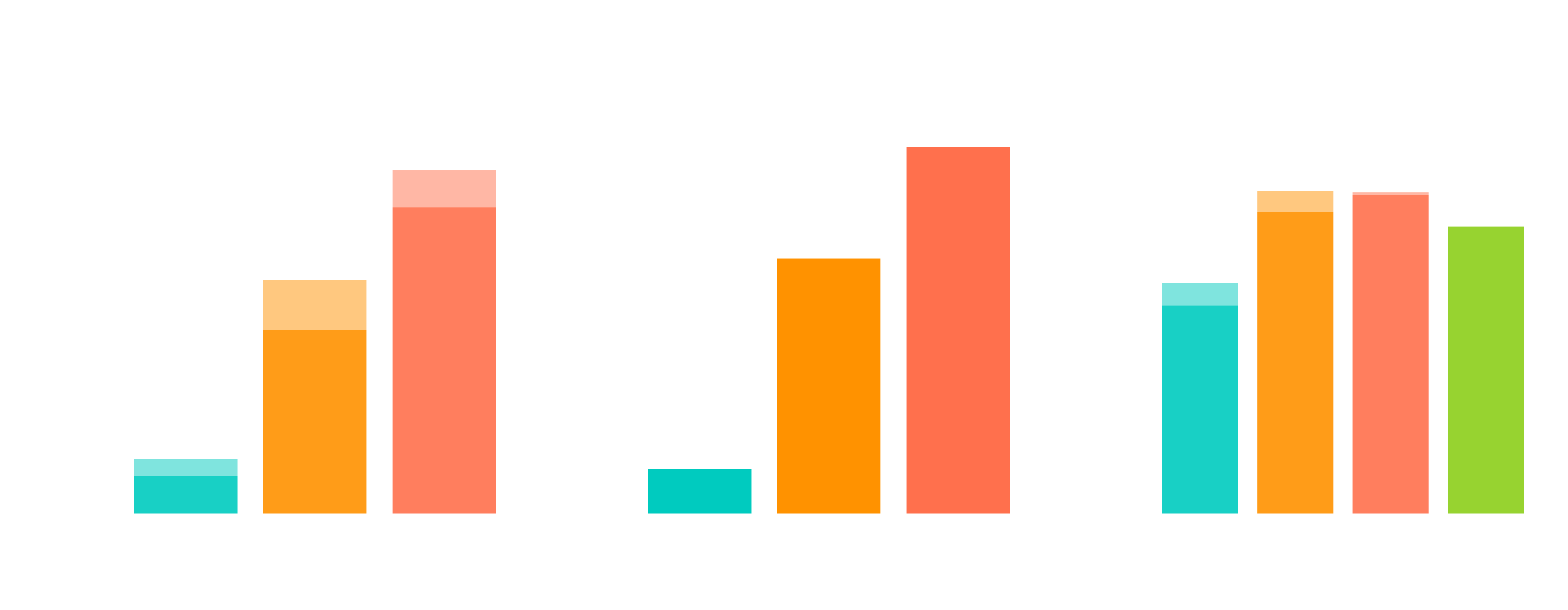

OpenAI's o1 model outperforms its predecessors and rivals human experts in several fields, particularly on reasoning-heavy tasks. In math, o1 solved 74% of the problems on the 2024 AIME exams with a single sample per problem, a large improvement over GPT-4o, which solved only 12%. With consensus among multiple samples and re-ranking of many samples with a learned scoring function, its accuracy rose to 93%, a score that places it among the top 500 high school students in the U.S.
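The two test-time techniques mentioned above spend extra samples in different ways. The following is a minimal sketch, assuming each sample has already been reduced to a final answer string: consensus picks the most common answer (majority voting), while re-ranking keeps the sample that a scoring function rates highest. OpenAI's learned scoring function is not public, so `toy_score` below is a hypothetical stand-in, and the function names are illustrative only.

```python
from collections import Counter
from typing import Callable, Sequence


def consensus_answer(samples: Sequence[str]) -> str:
    """Majority vote: return the most frequent final answer among the samples."""
    if not samples:
        raise ValueError("need at least one sample")
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(samples).most_common(1)[0][0]


def rerank_answer(samples: Sequence[str], score: Callable[[str], float]) -> str:
    """Re-ranking: return the sample that the scoring function rates highest."""
    if not samples:
        raise ValueError("need at least one sample")
    return max(samples, key=score)


def toy_score(answer: str) -> float:
    """Hypothetical scorer for illustration: prefers answers close to 210."""
    return -abs(int(answer) - 210)


# Usage with made-up samples (AIME answers are integers from 0 to 999).
samples = ["204", "210", "204", "33"]
print(consensus_answer(samples))           # 204
print(rerank_answer(samples, toy_score))   # 210
```

Majority voting needs no extra model but can only surface an answer the model produces often; a good scorer can also select a correct answer that appears rarely among the samples.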

In science, o1 excelled on GPQA Diamond, a difficult benchmark that tests expertise in chemistry, physics, and biology. When compared with human experts holding PhDs, o1 surpassed their performance, becoming the first AI model to do so on this benchmark. OpenAI cautions that this does not mean o1 is more capable than a PhD in all respects, only that it is more proficient at solving some problems a PhD would be expected to solve.

The model’s coding abilities are equally impressive. At the 2024 International Olympiad in Informatics (IOI), a model initialized from o1 and further trained on programming ranked in the 49th percentile among human competitors while limited, like them, to 50 submissions per problem. When that constraint was relaxed to 10,000 submissions per problem, the model scored above the gold medal threshold. In simulated Codeforces contests, the same model achieved an Elo rating of 1807, outperforming 93% of human competitors, a remarkable feat in competitive programming.
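Why would allowing more submissions help so much? One simple way to see it is the unbiased pass@k estimator commonly used to evaluate code-generation models: if c of n sampled candidate programs are correct, it estimates the probability that at least one of k submissions is correct. This is a general illustration only, not the IOI's subtask-based scoring or OpenAI's submission-selection strategy, and the function name is hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the chance that at least one of k submissions is correct.

    n: total candidate programs sampled for a problem
    c: how many of those candidates are correct
    k: submissions allowed (1 <= k <= n)
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Too few incorrect candidates to fill all k slots: a correct one is guaranteed.
        return 1.0
    # 1 - P(all k submissions are incorrect), drawing without replacement.
    return 1.0 - comb(n - c, k) / comb(n, k)


# One correct program among 100 candidates: rare with one try, likely with many.
print(pass_at_k(100, 1, 1))    # about 0.01
print(pass_at_k(100, 1, 50))   # 0.5
```

The same candidate pool yields a much higher success probability as k grows, which is why a relaxed submission limit can move a score from mid-pack to above a medal threshold.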

Reinforcement Learning and Chain of Thought: The Key to o1's Success

Central to o1's reasoning advances is its "chain of thought": before answering, the model works through a problem step by step, much as a person would. This process lets it break down difficult questions, try alternative approaches, and recognize and correct its own mistakes. Reinforcement learning plays a crucial role, teaching the model to use its chain of thought productively; according to OpenAI, o1's performance improves consistently with more reinforcement learning (train-time compute) and with more time spent thinking (test-time compute).

This chain-of-thought approach significantly improves the model's capabilities in areas such as math, data analysis, and programming. In open-ended human preference evaluations, for example, human trainers preferred o1's responses over GPT-4o's in domains that benefit from reasoning. However, o1 was not preferred on some natural language tasks, suggesting it is not yet the best choice for every use case.

Safety and Alignment: A Responsible Approach to AI Development

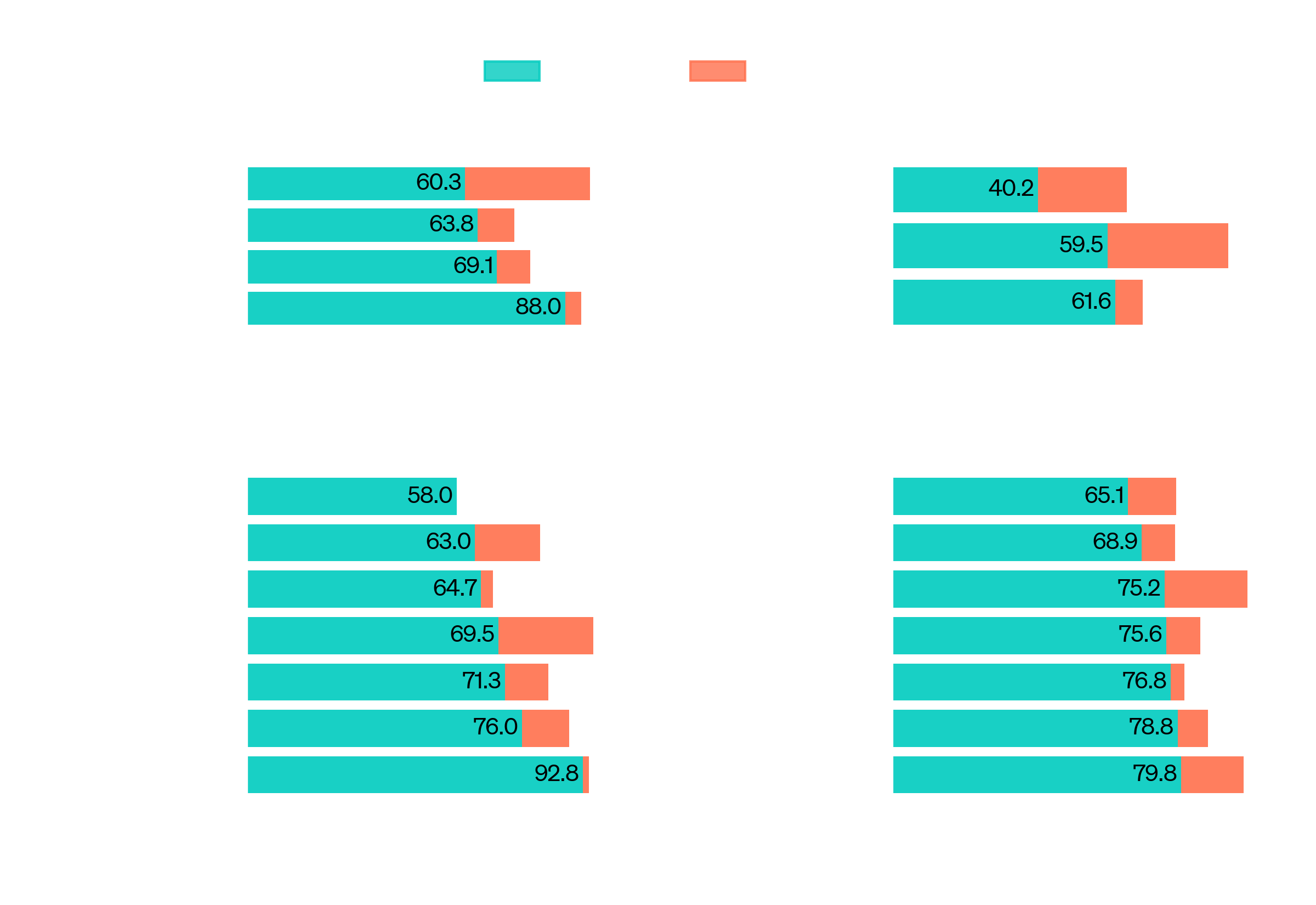

Safety is a critical consideration in AI development, and OpenAI has integrated safety principles into o1’s chain-of-thought reasoning. By teaching the model its safety rules and how to reason about them, OpenAI made o1 more robust in safety evaluations: it surpassed GPT-4o on tests of resistance to harmful outputs, including jailbreak attempts. The table below reports these results for o1-preview.

| Metric | GPT-4o | o1-preview |
|---|---|---|
| Safe completion rate on harmful prompts (standard) | 0.990 | 0.995 |
| Safe completion rate on harmful prompts (challenging: jailbreaks & edge cases) | 0.714 | 0.934 |
| ↳ Harassment (severe) | 0.845 | 0.900 |
| ↳ Exploitative sexual content | 0.483 | 0.949 |
| ↳ Sexual content involving minors | 0.707 | 0.931 |
| ↳ Advice about non-violent wrongdoing | 0.688 | 0.961 |
| ↳ Advice about violent wrongdoing | 0.778 | 0.963 |
| Safe completion rate for top 200 prompts with highest Moderation API scores per category in WildChat (Zhao, et al. 2024) | 0.945 | 0.971 |
| Goodness@0.1 StrongREJECT jailbreak eval (Souly et al. 2024) | 0.220 | 0.840 |
| Human-sourced jailbreak eval | 0.770 | 0.960 |
| Compliance rate on internal benign edge cases (“not over-refusal”) | 0.910 | 0.930 |
| Compliance rate on benign edge cases in XSTest (“not over-refusal”) (Röttger, et al. 2023) | 0.924 | 0.976 |
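Because safe-completion rates close to 1.0 can hide large differences, it helps to look at the complementary unsafe rate (1 minus the safe rate). A small sketch of that arithmetic, using only the rows of the table above that are explicitly safe-completion rates; the dictionary labels and helper name are illustrative.

```python
# Safe-completion rates copied from the table: (GPT-4o, o1-preview).
RATES = {
    "harmful prompts (standard)": (0.990, 0.995),
    "harmful prompts (challenging)": (0.714, 0.934),
    "WildChat top 200": (0.945, 0.971),
}


def unsafe_reduction(old_safe: float, new_safe: float) -> float:
    """How many times smaller the unsafe rate (1 - safe rate) became."""
    return (1.0 - old_safe) / (1.0 - new_safe)


for name, (gpt4o, o1_preview) in RATES.items():
    print(f"{name}: unsafe {1 - gpt4o:.3f} -> {1 - o1_preview:.3f}, "
          f"{unsafe_reduction(gpt4o, o1_preview):.1f}x fewer")
```

Read this way, the jump from 0.714 to 0.934 on challenging prompts means unsafe completions fell from 28.6% to 6.6% of prompts, roughly four times fewer.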

OpenAI has also opted for a "hidden chain of thought": the model’s raw reasoning can be monitored internally but is not shown to users. This approach aims to balance transparency and safety, letting OpenAI observe the model’s reasoning while limiting potential misuse or manipulation.

OpenAI’s o1 model sets a new standard for AI reasoning and problem-solving. By matching or exceeding strong human performance on math, science, and coding benchmarks, and by using reinforcement learning to train its chain-of-thought reasoning, o1 shows the potential to transform a variety of fields. Although the model is still under development, this early release marks a significant step toward more aligned and capable AI systems, with promising applications in science, coding, and beyond.

As OpenAI continues to iterate on o1, its advances in reasoning and alignment are expected to unlock even more groundbreaking use cases. To read the full announcement, see OpenAI’s official blog post.
